Google’s AI Hype Circle

We have to do Bard because everyone else is doing AI; everyone else is doing AI because we’re doing Bard.

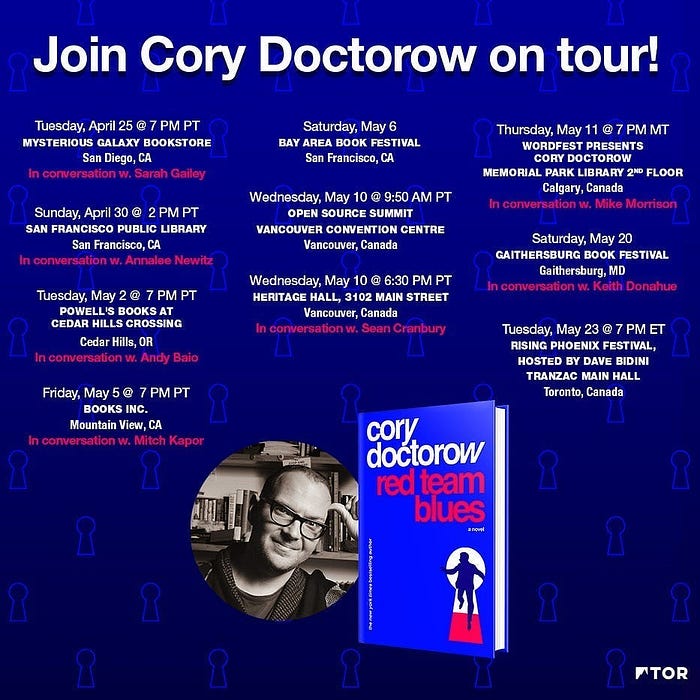

Next Saturday (May 20), I’ll be at the GAITHERSBURG Book Festival with my novel Red Team Blues; then on May 22, I’m keynoting Public Knowledge’s Emerging Tech conference in DC.

On May 23, I’ll be in TORONTO for a book launch that’s part of WEPFest, a benefit for the West End Phoenix, onstage with Dave Bidini (The Rheostatics), Ron Deibert (Citizen Lab) and the whistleblower Dr Nancy Olivieri.

The thing is, there really is an important area of AI research for Google, namely, “How do we keep AI nonsense out of search results?”

Google’s search quality has been in steady decline for years. I blame the company’s own success. When you’ve got more than 90 percent of the market, you’re not gonna grow by attracting more customers — your growth can only come from getting a larger slice of the pie, at the expense of your customers, business users and advertisers.

Google’s product managers need that growth. For one thing, the company spends $45 billion every year to bribe companies like LG, Samsung, Motorola and Apple to be their search default. That is to say, they’re spending enough to buy an entire Twitter, every single year, just to stay in the same place.

More personally, each product manager’s bonus — worth several multiples of their base salary — depends on showing growth in their own domain. That means that each product manager’s net worth is tied up in schemes to shift value from Google’s users and customers to Google. That’s how we get a Google search-results page that is dominated by ads (including an increasing proportion of malvertising and scamvertising), SEO spam, and “knowledge panels” of dubious quality.

All of this has weakened Google’s own ranking system to the point where a link’s presence among the top results for a Google search is correlated with scamminess, not relevance. This is a vicious cycle: the worse Google’s search results are, the more they depend on being the default search (because no one would actively choose a search engine with unreliable, spammy results). The more they spend on being the default, the sweatier and more desperate their money-grabbing tactics become, and the worse the search results get, and the more they need to spend to keep you locked in.

The decline of Google search is a big deal. Google may not have been able to create a viable video service, or a social network, or even an RSS reader. They may waste fortunes on “moonshot” boondoggles like “smart cities” and “self-driving cars.” They might fill an entire graveyard with failed initiatives. Google may depend on buying up other people’s companies to get an ad-tech stack, a mobile operating system, a video service, a documents system, a maps tool, and every other successful Google product (with the exception of the Hotmail clone and the browser built on discarded Safari code).

The company might be a serial purchaser masquerading as Willy Wonka’s idea-factory — but at least Google was unequivocally Good At Search. Until they weren’t.

Indeed, it’s not clear if anyone can be good enough at search to be the world’s only search-engine. The fact that we all use the same search-engine to find our way around the internet means that anyone who can figure out how to game the search-engine’s ranking algorithm has a literally unlimited pool of potential customers who will pay for “search engine optimization,” in hopes of landing on the first page.

For decades, Google has refused to publish detailed descriptions of its algorithm’s ranking metrics, insisting that doing so would only abet the SEO industry. “Security through obscurity” is widely considered to be a fool’s errand, but somehow, the entire infosec world gave Google a pass when it said, “We could tell you how search works — but then we’d have to kill you.”

Having to build a search-ranking algorithm that can withstand relevancy attacks funded by every legitimate business, every scammer, every influencer, every publisher, every religion and every cause on the entire planet Earth might just be an unattainable goal. As Elizabeth Lopatto put it:

[The] obvious degradation of Google Search [was] caused in part by Google’s own success: whole search engine optimization teams have been built to make sure websites show on the first page of search since most people never click through to the second. And there’s been a rise of SEO-bait garbage that surfaces first.

Search was Google’s crown jewel, its sole claim to excellence in innovation. Sure, the company is unparalleled in its ability to operationalize and scale up other people’s ideas, but that’s just another way of saying, “Google is a successful monopolist.” Since the age of the rail barons, being good at running other people’s companies has been a prerequisite for monopoly success. But administering other people’s great ideas isn’t the same thing as coming up with your own.

With search circling the drain, Google’s shareholders are losing confidence. In the past year, the company caved to “activist investors,” laying off 12,000 engineers after a stock buyback that would have paid all 12,000 salaries for the next 27 years.

Nominally, Google is insulated from its investors’ whims. The company’s founders, Sergey Brin and Larry Page, may have sold off the majority of their company’s equity, but they retained a majority of the votes, writing:

If opportunities arise that might cause us to sacrifice short term results but are in the best long term interest of our shareholders, we will take those opportunities. We will have the fortitude to do this. We would request that our shareholders take the long term view.

But while owning a majority of the voting shares insulates the founders from direct corporate governance challenges, it doesn’t insulate them from mass stock-selloffs, which jeopardize their own net worth, as well as the company’s ability to attract and retain top engineers and executives, who join the company banking on massive stock grants that will go up in value forever. If Google’s stock is no longer a sure thing, then it will have to hire key staff with money it could use for other stuff, not the stock it issues itself, for free.

So Google finds itself on the horns of a dilemma.

- It can try to fix search, spending a fortune making search better, even as the web is flooded with text generated by “AI” chatbots. If it does that, shareholders will continue to punish the company, making it more expensive to hire the kinds of engineers who can help weed out spam.

- It can chase the “AI” hype cycle, blowing up its share-price even as its search quality declines. Maybe it will invent a useful “AI” application that yields another quarter-century of monopoly, but maybe not. After all, it’s much easier to launch a competing “AI” company than it is to launch a competing search company.

The company chose number two. Of course they did.

This does not bode well. “AI” tools can do some useful things, to be sure. A friend recently told me about how large language models can help debug notoriously frustrating CSS bugs, saving them a significant amount of time.

That’s great! It’s a really cool next step on the long road that developer tools have traveled, tools that automatically catch errors and suggest fixes, from mismatched quotes to potentially deadly memory errors. There’ve been a million of these tools, and each one has made programmers more productive, and, more importantly, has expanded the pool of people who can write code.

But no one ever looked at a new error-checking feature in a compiler and announced that programmers were obsolete, or that they were going to cancel 7,800 planned hires on the strength of a new tool.

The entire case for “AI” as a disruptive tool worth trillions of dollars is grounded in the idea that chatbots and image-generators will let bosses fire hundreds of thousands or even millions of workers.

That’s it.

But the case for replacing workers with shell-scripts is thin indeed. Say that the wild boasts of image-generator companies come true and every single commercial illustrator can be fired. That would be ghastly and unjust, but commercial illustrators are already a tiny, precarious, grotesquely underpaid workforce who are exploited with impunity thanks to a mix of “vocational awe” and poor opportunities in other fields.

Take the wages of every single illustrator, throw in the wages of every single rote copywriter churning out press-releases and catalog copy, add a half-dozen more trades, and multiply it by two orders of magnitude, and it still doesn’t add up to the “six trillion dollar opportunity” Morgan Stanley has promised us.

We’ve seen this movie before. It was just five years ago that we were promised that self-driving technology was about to make truck-driving obsolete. The scare-stories warned us that this would be a vast economic dislocation, because “truck driver” was one of the most common jobs in America.

All of this turned out to be wrong. First of all, self-driving cars are a bust. Having absorbed hundreds of billions in R&D money, the self-driving car remains an embarrassment at best and a threat to human safety at worst.

But even if self-driving cars ever become viable (a big if!), truck-driver isn’t among the most common jobs in America — at least, not in the way that we were warned of.

The “3,000,000 truck drivers” who were supposedly at risk from self-driving tech are a mirage. The US Standard Occupational Survey conflates “truck drivers” with “driver/sales workers.” “Trucker” also includes delivery drivers and anyone else operating a heavy-goods vehicle.

The truckers who were supposedly at risk from self-driving cars were long-haul freight drivers, a minuscule minority among truck drivers. The theory was that we could replace 16-wheelers with autonomous vehicles that traveled the interstates in their own dedicated, walled-off lanes, communicating vehicle to vehicle to maintain following distance. The technical term for this arrangement is “a shitty train.”

What’s more, long-haul drivers do a bunch of tasks that self-driving systems couldn’t replace: “checking vehicles, following safety procedures, inspecting loads, maintaining logs, and securing cargo.”

But again, even if you could replace all the long-haul truckers with robots, it wouldn’t justify the sky-high valuations that self-driving car companies attained during the bubble. Long-haul truckers are among the most exploited, lowest paid workers in America. Transferring their wages to their bosses would only attain a modest increase in profits, even as it immiserated some of America’s worst-treated workers.

But the twin lies of self-driving trucks — that these were on the horizon, and that they would replace 3,000,000 workers — were lucrative lies. They were the story that drove billions in investment and sky-high valuations for any company with “self-driving” in its name.

For the founders and investors who cashed out before the bubble popped, the fact that none of this was true wasn’t important. For them, the goal of successful self-driving cars was secondary. The primary objective was to convince so many people that self-driving cars were inevitable that anyone involved in the process could become a centimillionaire or even a billionaire.

This is at the core of bubblenomics. Back in 2017, the Long Island Iced Tea company changed its name to the “Long Blockchain Corp.” Despite the fact that it wasn’t actually doing anything with blockchains, and despite the fact that blockchains have turned out to be economically useless, the company’s stock valuation shot through the roof and its investors made a bundle.

Likewise, the supposed inevitability of self-driving cars — a perception that was substantially fueled by critics who repeated boosters’ lies as warnings, rather than boasts — spilled over into adjacent sectors, creating sub-bubbles, like the “drone-delivery bubble.”

It’s hard to see how “AI” chatbots will help Google improve search, but that doesn’t mean that Google shouldn’t be thinking hard about AI.

AI is an existential crisis for Google. These chatbots are such confident liars that they often trick real people — even experts — into believing and repeating wrong things.

Back in February, the economist Tyler Cowen published a bunch of startling quotes from Francis Bacon on the problems with the printing press.

The quotes were startling because Bacon never said them. They appear to have been “hallucinated” by a chatbot. Cowen — a prominent chatbot booster — believed them enough to publish them.

All right, fine. Everyone makes mistakes, but what does this have to do with Google?

Well, Google trusts Cowen’s website because he is considered an expert. When Cowen published the fabricated quotes, Google ingested them and started serving them back as search-results, laundering a chatbot’s lies into widely dispersed truths.

David Roth called Cowen’s mistake “the worst, most elementary journalistic sin[] regarding sourcing and accountability.” It’s true that journalists — and even bloggers — should double-check sources and quotes, especially juicy, surprising ones.

But the reality is that if a source is usually reliable — if it’s almost always reliable — then it is going to end up being widely trusted. Few people are going to double-check every single reference and citation. (Though this is often a fruitful tactic! The expert AI critics Timnit Gebru and Emily Bender ascribe their excellence to “reading all the citations.”)

This is classic “automation blindness.” If something usually works, it’s hard to remain vigilant to the possibility that it will fail. If it almost always works, vigilance becomes nearly impossible.

The problem isn’t that the chatbots lie all the time — it’s that they usually tell the truth, but then they start spouting confident lies.

Google is one of those sources that’s usually right, except when it isn’t, and that we end up trusting as a result. It’s very hard not to trust Google, but every now and again, that trust is drastically misplaced.

That means that when Google ingests and repeats a lie, the lie gets spread to more sources. Those sources then form the basis for a new kind of ground truth, a “zombie statistic” that can’t be killed, despite its manifest wrongness.

This isn’t a new problem for Google. In 2012, the writer Tom Scocca realized that every single recipe he followed instructed cooks to leave 5–10 minutes to caramelize onions. This is very wrong. Scocca set up a little test kitchen and demonstrated that the real time is “28 minutes if you cooked them as hot as possible and constantly stirred them, 45 minutes if you were sane about it.”

He published a great exposé about the Big Onion Lie, which Google duly indexed and integrated into its “knowledge graph.” But because Google’s parser was stupid, the conclusion it drew from its analysis of Scocca’s article was that you should plan on caramelizing your onions in 5–10 minutes — the exact opposite conclusion to the one Scocca drew.

For years afterwards, anyone who typed, “How long does it take to caramelize onions” got a “knowledge panel” result over their search results that said “5–10 minutes” — and attributed that lie to Tom Scocca, the man who had debunked it.

Not everyone who typed “How long does it take to caramelize onions” into Google was looking to caramelize onions. Many of them were writing up recipes, and they repeated the lie. Google then re-indexed that lie and found more ways to repeat it. An unkillable zombie “fact” had risen.

Google has a serious AI problem. That problem isn’t “how to integrate AI into Google products?” That problem is “how to exclude AI-generated nonsense from Google products?”

Google is piling into solving the former problem, and in so doing, it’s making the latter, far more serious, problem worse. Google’s Bard chatbot is now advising searchers to visit nonexistent websites to purchase nonexistent products. As Google CEO Sundar Pichai told Verge editor-in-chief Nilay Patel, “I looked for some products in Bard, and it offered me a place to go buy them, a URL, and it doesn’t exist at all.”

And that’s before malicious actors start trying to deliberately trick Google’s chatbot. If the chatbot works well enough that we come to trust it, then the dividends for tricking it will be enormous. As Bruce Schneier and Nathan Sanders wrote,

Imagine you’re using an AI chatbot to plan a vacation. Did it suggest a particular resort because it knows your preferences, or because the company is getting a kickback from the hotel chain? Later, when you’re using another AI chatbot to learn about a complex economic issue, is the chatbot reflecting your politics or the politics of the company that trained it?

The risks of AI are real: AI really could result in huge numbers of workers being fired.

But just because a corporation fires a human and replaces them with software, it doesn’t follow that the software can do the human’s job. Executives are so incredibly horny to fire their workers that they will do so even if their replacements are inadequate, useless, or even counterproductive.

Remember when customer service lines were staffed by people who worked for the company and answered your questions? As hold-queues stretched, executives “contained costs” by replacing customer service with outsourced operators, or even automated speech-recognition systems that were incredibly bad.

They were bad, but they were cheap. They didn’t satisfy customers, but they “satisficed” them. “Satisficing” is Nobel economist Herbert Simon’s word describing things that don’t “satisfy,” but do “suffice.”

[I]t’s best to understand Generative AI tools as cliche-generators. The AI isn’t going to give you the optimal Disney World itinerary; It’s going to give you basically the same trip that everyone takes. It isn’t going to recommend the ideal recipe for your tastes; it’s just going to suggest something that works…

Can a Generative AI produce a sitcom script? Yeah, it can. Will the script be any good? Meh. It will be, at best, average. Maybe it will be a creative kind of average, by remixing tropes from different genres (“Shakespeare in spaaaaaaaaace!”), but it’s still going to be little more than a rehash.

As it happens, I live in Burbank, home to two of the Big Four studios (Warner and Disney) and when I got home from my latest book-tour stop on Friday I dropped off my suitcase and joined the picket-line out front of Warner’s, joining Burbank’s mayor Konstantine Anthony on the line.

I’m not a screen-writer, but I am a novelist, and I’ve done enough screenplay work that I’m a member of a Hollywood union (The Animation Guild, IATSE Local 839). Walking the line, I got to talk to a number of Writers Guild of America West members who were out striking, and we traded war-stories about the studio execs who want to fire our asses and replace us with chatbots.

One writer was describing how frustrating execs’ “notes” can be. They call in writers with some kind of high concept, like, “Make me another Everything Everywhere All At Once, but make it horror, only like E.T.”

In other words, execs already treat writers like chatbots.

Title: “Interdimensional Shadows”

Genre: Horror

Logline: When a small-town teenager discovers a mysterious artifact, she unwittingly opens a gateway to a terrifying alternate dimension. With the help of her outcast friends, she must face interdimensional horrors while attempting to close the portal before the shadows consume their world.

That’s what ChatGPT spit out in response to that prompt. It then delivered six acts of nonsense on this theme, ending with “‘Interdimensional Shadows’ combines elements of horror and the supernatural, taking inspiration from ‘Everything Everywhere All At Once’ and ‘E.T.’ The film explores themes of grief, friendship, and the resilience of the human spirit when faced with unimaginable horrors from other dimensions.”

While this would be a rotten movie, it could certainly be pleasing to an exec who’s tired of writers pushing back against their “brilliant ideas.” It could satisfice quite well. Small wonder the studios are so in love with the idea of chatbots.

Studio execs’ painful ardor for firing writers doesn’t mean that it will work. That doesn’t really matter, at least not to Google’s shareholders, including its top execs. After announcing a suite of chatbot integrations into its products, Google’s share price shot up, making $9 billion for Google’s present and past executives.

The story that chatbots will replace humans doesn’t have to be true for it to be useful. As the writer Will Kaufman put it:

AI fearmongering is AI marketing. “It will replace people” is absolutely the value-add for AI. It’s why every C-suite in the country is suddenly obsessed.

“AI will gain sentience and replace people!!!”

“You mean we can cut payroll without cutting productivity???”

This goes a long way to explaining why Google execs are so exercised about AI’s “existential risks.” Take the incoherent story that a chatbot will become sentient and enslave humanity, which is like worrying that selective horse-breeding will eventually produce so much equine speed and stamina that horses will spontaneously transmute into diesel engines.

Meanwhile, the actual “AI” harms are deemed unimportant. As Signal president Meredith Whittaker put it, “it’s stunning that someone would say that the harms [from AI] that are happening now — which are felt most acutely by people who have been historically minoritized: Black people, women, disabled people, precarious workers, et cetera — that those harms aren’t existential.”

Whittaker added:

What I hear in that is, “Those aren’t existential to me. I have millions of dollars, I am invested in many, many AI startups, and none of this affects my existence. But what could affect my existence is if a sci-fi fantasy came to life and AI were actually super intelligent, and suddenly men like me would not be the most powerful entities in the world, and that would affect my business.”

She’s right, but beyond that point, warnings about “existential risk” from AI actually make AI companies more valuable. If AI is so powerful that it could bring about the end of the human race, it’s probably a great financial investment, right?

The irony is that the workers AI is best situated to replace are the execs who are pumping up the hype bubble. As Craig Francis said:

AI will replace C-suite people first… as it’s so much better/faster at taking in a lot of data and making decisions, often with less personal biases (though biases will still exist).

After all, “confident liar that doesn’t know when it’s lying” is a significant fitness factor for tech’s most successful executives. Or, as SaftyKuma put it:

Look at the typical output from ChatGPT: [it’s] sometimes impressive at first glance but under further scrutiny vapid, generic, full of untruths, and lacking any real deep, meaningful content.

The C-Suite are the very FIRST people ChatGPT should replace.

Catch me on tour with Red Team Blues in Toronto, DC, Gaithersburg, Oxford, Hay, Manchester, Nottingham, London, and Berlin!

Cory Doctorow (craphound.com) is a science fiction author, activist, and blogger. He has a podcast, a newsletter, a Twitter feed, a Mastodon feed, and a Tumblr feed. He was born in Canada, became a British citizen and now lives in Burbank, California. His latest nonfiction book is Chokepoint Capitalism (with Rebecca Giblin), a book about artistic labor markets and excessive buyer power. His latest novel for adults is Attack Surface. His latest short story collection is Radicalized. His latest picture book is Poesy the Monster Slayer. His latest YA novel is Pirate Cinema. His latest graphic novel is In Real Life. His forthcoming books include Red Team Blues, a noir thriller about cryptocurrency, corruption and money-laundering (Tor, 2023); and The Lost Cause, a utopian post-GND novel about truth and reconciliation with white nationalist militias (Tor, 2023).