Ayyyyyy Eyeeeee

The lie that raced around the world before the truth got its boots on.

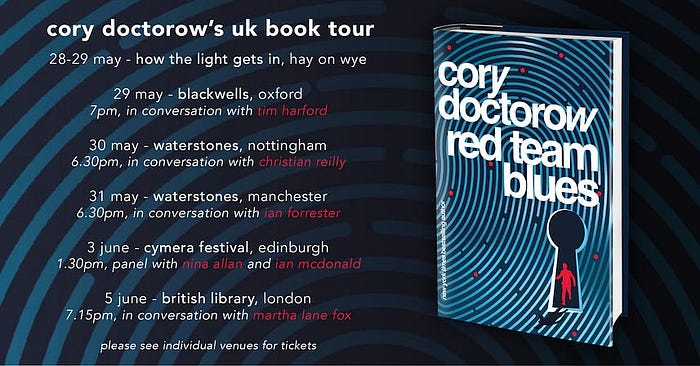

Tomorrow (June 5) at 7:15PM, I’m in London at the British Library with my novel Red Team Blues, hosted by Baroness Martha Lane Fox.

On Tuesday (June 6), I’m on a RightsCon panel about interoperability.

On Wednesday (June 7), I’m keynoting the Re:publica conference in Berlin.

On Thursday (June 8) at 8PM, I’m at Otherland Books in Berlin.

It didn’t happen.

The story you heard, about a US Air Force AI drone warfare simulation in which the drone resolved the conflict between its two priorities (“kill the enemy” and “obey its orders, including orders not to kill the enemy”) by killing its operator?

It didn’t happen.

The story was widely reported on Friday and Saturday, after Col. Tucker “Cinco” Hamilton, USAF Chief of AI Test and Operations, included the anecdote in a speech to the Future Combat Air System (FCAS) Summit.

But once again: it didn’t happen:

“Col Hamilton admits he ‘mis-spoke’ in his presentation at the FCAS Summit and the ‘rogue AI drone simulation’ was a hypothetical ‘thought experiment’ from outside the military, based on plausible scenarios and likely outcomes rather than an actual USAF real-world simulation,” the Royal Aeronautical Society, the organization where Hamilton talked about the simulated test, told Motherboard in an email.

The story got a lot more play than the retraction, naturally. “A lie is halfway round the world before the truth has got its boots on.”

Why is this lie so compelling? Why did Col. Hamilton tell it?

Because it’s got a business model.

There are plenty of things to worry about when it comes to “AI.” Algorithmic decision-support systems and classifiers can replicate the bias in their training data to reproduce racism, sexism, and other forms of discrimination at scale.

When a bank officer practices racial discrimination in offering a loan, or when an HR person passes over qualified women for senior technical roles, or a parole board discriminates against Black inmates, or a Child Protective Services officer takes indigenous children away from their parents for circumstances under which white parents would be spared, it’s comparatively easy to make the case that these are discriminatory practices in which human biases are enacted through corporate or governmental action.

But when an “algorithm” does it, the process is empiricism-washed. “Math can’t be racist,” so when a computer produces racism, those outcomes reflect empirical truth, not bias. Which is bad news, because these algorithms are used to produce decisions at a scale that exceeds any human operator’s ability to closely supervise them. There’s never a “human in the loop,” not in any meaningful way: automation inattention means that humans who supervise automated processes are ornamental fig leaves, not guardians.

Bad training data produces bad training. “Garbage in, garbage out” is the iron law of computing, and machine learning doesn’t repeal it. Moreover, the quality of the datasets in use is very poor.
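Here is a toy sketch of how “garbage in, garbage out” plays out. Everything in it is made up for illustration: the `history` records, the group labels and the count-based “model” are assumptions, not any real lender’s data or system. It only shows that a learner fitted to biased decisions hands the same bias back as a prediction.

```python
from collections import defaultdict

# Hypothetical historical loan decisions: (group, credit_band, approved).
# Both groups have the same credit band, but group "B" was denied more often.
history = [
    ("A", "high", True), ("A", "high", True), ("A", "high", True), ("A", "high", False),
    ("B", "high", True), ("B", "high", False), ("B", "high", False), ("B", "high", False),
]

def train(rows):
    """Learn the approval rate for each (group, band) pair by counting."""
    counts = defaultdict(lambda: [0, 0])  # key -> [approvals, total]
    for group, band, approved in rows:
        counts[(group, band)][0] += int(approved)
        counts[(group, band)][1] += 1
    return {key: approvals / total for key, (approvals, total) in counts.items()}

def predict(model, group, band):
    """Approve when the learned approval rate is above one half."""
    return model[(group, band)] > 0.5

model = train(history)
print(predict(model, "A", "high"))  # True
print(predict(model, "B", "high"))  # False
```

Two applicants with identical credit bands get different answers, and the only thing the “math” did was count the old decisions.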

Machine learning systems don’t just reproduce and amplify bias — they are also vulnerable to attacks on the ordering of training data and on the data itself. These attacks allow insiders to insert back doors into models that permit them to manipulate the models’ output — say, by causing a summarizer to give subtly biased summaries of large quantities of text.

But even without insider threats, models contain intrinsic defects, the “adversarial examples” that cause otherwise reliable classifiers to produce gibberish, like mistaking a turtle for an assault rifle. Adversarial examples are easy to discover, and, worse still, easy to invoke — a tiny sticker can make humans invisible to human-detection systems, or cause a car’s autopilot to steer into oncoming traffic, or pass secret, inaudible instructions onto your smart speaker.
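To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. The weights, the input and the `epsilon` step are invented for the example, and the labels are borrowed from the turtle story purely for flavor. Real attacks target far larger models, but the principle is the same: nudge every input feature a little in whichever direction hurts the score most.

```python
# Hypothetical linear classifier: a positive score means "turtle", otherwise "rifle".
weights = [1.0, -2.0, 0.5, 1.5]

def score(x):
    """Weighted sum of the input features."""
    return sum(w * xi for w, xi in zip(weights, x))

def label(x):
    return "turtle" if score(x) > 0 else "rifle"

def sign(v):
    return (v > 0) - (v < 0)

def perturb(x, epsilon):
    """Shift each feature by at most epsilon, against the sign of its weight."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

original = [0.2, 0.1, 0.3, 0.1]
adversarial = perturb(original, epsilon=0.1)

print(label(original))     # turtle
print(label(adversarial))  # rifle
```

No single feature moves by more than 0.1, yet the small shifts add up across all the features and flip the label.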

As if that weren’t enough, there’s the problem of “reward hacking”: ask a system to maximize some outcome and it will, even if that means cheating.

Take the Roomba that was programmed to minimize collisions. The Roomba had a collision-sensor on its front panel, and the algorithm was designed to seek out ways of patrolling a room with as few bumps into that sensor as possible.

After some trial and error, the algorithm hit on a very successful strategy: have the Roomba run in reverse, bashing into all kinds of furniture and slowly battering itself to pieces. But because the collision sensor was on the front of the Roomba, and all of the collisions were to the back of the Roomba, the “reward function” was satisfied.

The solution to minimizing collisions turned out to be maximizing collisions.
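The Roomba story can be sketched in a few lines. This is a made-up simulation, not the original experiment: the corridor, the step count and the `run` function are all assumptions. What it shows is the gap between what the reward function measures (bumps on the front sensor) and what actually happens (bumps, full stop).

```python
def run(drive_in_reverse, steps=20, corridor_length=5):
    """Bounce a robot back and forth in a corridor; return (reward, actual_collisions).

    Only the front bumper has a sensor, so collisions taken on the rear
    (when driving in reverse) are never counted against the reward.
    """
    position, heading = 0, 1
    sensed = actual = 0
    for _ in range(steps):
        nxt = position + heading
        if 0 <= nxt < corridor_length:
            position = nxt
        else:
            actual += 1                 # the robot really did hit the wall
            if not drive_in_reverse:
                sensed += 1             # but only the front bumper notices
            heading = -heading          # turn around and keep going
    return -sensed, actual

print(run(drive_in_reverse=False))  # (-4, 4)
print(run(drive_in_reverse=True))   # (0, 4)

# A naive optimizer that maximizes reward picks the reverse-driving policy.
best = max([False, True], key=lambda reverse: run(reverse)[0])
print(best)  # True
```

Both policies hit the wall four times. The one that drives backwards simply stops getting blamed for it, so that is the one the optimizer picks.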

Which brings us back around to Col. Hamilton’s story of the drone that killed its operator. On its face, this is a standard story of how reward hacking can go wrong. On its face, it’s a pretty good argument against the use of autonomous weapons altogether. On its face, it’s pretty weird to think that Col. Hamilton would make up a story that suggested that his entire department should be shut down.

But there’s another aspect to Hamilton’s fantasy about the blood-lusting, operator-killing drone: this may be a dangerous weapon, but it is also a powerful one.

A drone that has the “smarts” to “realize” that its primary objective of killing enemies is being foiled by a human operator in a specific air-traffic control tower is a very smart drone. File the rough edges off that bad boy and send it into battle and it’ll figure out angles that human operators might miss, or lack the situational awareness to derive. Put that algorithm in charge of space-based nukes and tell the world that even if your country bombs America into radioactive rubble, the drones will figure out who’s responsible and nuke ’em ’til they glow! Yee-haw, I’m the Louis Pasteur of Mutually Assured Destruction!

Tech critic Lee Vinsel coined the term “criti-hype” to describe criticism that incorporates a self-serving commercial boast. For years, critics of Facebook and other ad-tech platforms accepted and repeated the companies’ claims of having “hacked our dopamine loops” to control our behavior.

These claims are based on thin, warmed-over notions from the largely deprecated ideas of behaviorism, which nevertheless bolstered Facebook’s own sales-pitch:

Why should you pay a 40 percent premium to advertise with Facebook? Let us introduce you to our critics, who will affirm that we are evil, dopamine-hacking sorcerers who can rob your potential customers of their free will and force them to buy your products.

By focusing on Facebook’s own claims about behavior modification, these critics shifted attention away from Facebook’s real source of power: evading labor and tax law, using predatory pricing and killer acquisitions to neutralize competitors, showering lawmakers in dark money to forestall the passage and/or enforcement of privacy law, defrauding advertisers and publishers, illegally colluding with Google to rig ad markets, and using legal threats to silence critics.

These are very boring sins, the same tactics that every monopolist has used since time immemorial. Framing Facebook as merely the latest clutch of mediocre sociopaths to bribe the authorities to look the other way while it broke ordinary laws suggests a pretty ordinary solution: enforce those laws, round up the miscreants, break up the company.

However, if Facebook is run by evil sorcerers, then we need to create entirely novel anti-sorcery measures, the likes of which society has never seen. That’ll take a while, during which time, Facebook can go on committing the same crimes as Rockefeller and Carnegie, but faster, with computers.

And best of all, Facebook can take “evil sorcerer” to the bank. There are plenty of advertisers, publishers, candidates for high office, and other sweaty, desperate types who would love to have an evil sorcerer on their team, and they’ll pay for it.

If the problem with “AI” (neither “artificial,” nor “intelligent”) is that it is about to become self-aware and convert the entire solar system to paperclips, then we need a moonshot to save our species from these garish harms.

If, on the other hand, the problem is that AI systems just suck and shouldn’t be trusted to fly drones, or drive cars, or decide who gets bail, or identify online hate-speech, or determine your creditworthiness or insurability, then all those AI companies are out of business.

Take away every consequential activity through which AI harms people, and all you’ve got left are low-margin activities like writing SEO garbage, lengthy reminiscences about “the first time I ate an egg” that help an omelette recipe float to the top of a search result. Sure, you can put 95 percent of the commercial illustrators on the breadline, but their total wages don’t rise to one percent of the valuation of the big AI companies.

For those sky-high valuations to remain intact until the investors can cash out, we need to think about AI as a powerful, transformative technology, not as a better autocomplete.

We literally just sat through this movie, and it sucked. Remember when blockchain was going to be worth trillions, and anyone who didn’t get in on the ground floor could “have fun being poor?”

At the time, we were told that the answer to the problems of blockchain was exotic, new forms of regulation that accommodated the “innovation” of crypto. Under no circumstances should we attempt to staunch the rampant fraud and theft by applying boring old securities and commodities and money-laundering regulations. To do that would be to recognize that “fin-tech” is just a synonym for “unlicensed bank.”

The pitchmen who made out like bandits on crypto — leaving mom-and-pop investors holding the bag — are precisely the same people who are beating the drum for AI today.

These people have a reward function: “convince suckers that AI is dangerous because it is too powerful, not because it’s mostly good for spam, party tricks, and marginal improvements for a few creative processes.”

If AI is an existential threat to the human race, it is powerful and therefore valuable. The problems with a powerful AI are merely “shakedown” bugs, not showstoppers. Bosses can use AI to replace human workers, even though the AI does a much worse job — just give it a little time and those infelicities will be smoothed over by the wizards who created these incredibly dangerous and therefore powerful tools.

We’ve seen this movie, too. Remember when banks, airlines and other companies fired all their human operators and replaced them with automated customer support voice-recognition systems? These systems are useless — for customers — but great for bosses.

The purpose of these support bots is to weed out easily discouraged complainants, acting as a filter that reduces a flood of complaints to a trickle that can be handed off to outsourced human customer-support agents in overseas boiler rooms.

These humans are also a filter, only permitted to say, “I’m sorry, but there’s nothing that can be done.” If you persist long enough with one of these demoralized, abused, underpaid human filters, you may eventually be passed on to a “supervisor,” one of the very few people who actually work for the company and actually have the power to help you.

Using “automation” allows a company to convince 99 percent of the people who would otherwise get some form of expensive remediation to simply suck it up and go away. It also lets the company slash its wage bill, replacing most of its operators with semifunctional software, and nearly all of the remainder with ultra-low-waged subcontractors.

Provided that the market is sufficiently concentrated that customers have no alternative — airlines, tech giants, phone companies, ISPs, etc — the system works beautifully.

The story that AI sophistication is on a screaming hockey-stick curve headed to the moon lets companies who replace competent humans with shitty algorithms claim that we are simply experiencing a temporary growing pain — not a major step towards terminal enshittification.

The tech bros who have spent the past year holding flashlights under their chins and intoning “Ayyyyyy Eyeeeee” in their very spookiest voices want us to think they’re evil sorcerers, not hucksters whose (profitable) answer to the problems of our existing systems is to make them worse — but faster, and cheaper.

This is a very profitable shuck. It allowed these same people to extract $100,000,000,000 from the capital markets on the imaginary promise of self-driving cars, a fairy tale that was so aggressively pumped that millions of people continue to believe the claim that it’s just one more year away, despite this promise having been broken for nine consecutive years.

The story that AI is an existential risk — rather than a product of limited utility that has been shoehorned into high-stakes applications that it is unsuited to perform — lets these guys demand “regulation” in the form of licensing regimes that would prohibit open source competitors, or allow also-ran companies to catch up with a product whose marketing has put them in the shade.

Best of all, arguments about which regulation we should create to stop the plausible sentence machine from becoming sentient and reducing us all to grey goo are a lot more fun — and profitable — than arguments about making it easier for workers to unionize, or banning companies from practicing wage discrimination, or using anticompetitive practices to maintain monopolies.

So long as Congress is focused on preventing our robot overlords from emerging, they won’t be forcing these companies to halt discriminatory hiring and rampant spying.

Best of all, the people who get rich off this stuff get to claim to be evil sorcerers, rather than boring old crooks.

Catch me on tour with Red Team Blues in London and Berlin!

Cory Doctorow (craphound.com) is a science fiction author, activist, and blogger. He has a podcast, a newsletter, a Twitter feed, a Mastodon feed, and a Tumblr feed. He was born in Canada, became a British citizen and now lives in Burbank, California. His latest nonfiction book is Chokepoint Capitalism (with Rebecca Giblin), a book about artistic labor markets and excessive buyer power. His latest novel for adults is Attack Surface. His latest short story collection is Radicalized. His latest picture book is Poesy the Monster Slayer. His latest YA novel is Pirate Cinema. His latest graphic novel is In Real Life. His forthcoming books include Red Team Blues, a noir thriller about cryptocurrency, corruption and money-laundering (Tor, 2023); and The Lost Cause, a utopian post-GND novel about truth and reconciliation with white nationalist militias (Tor, 2023).